Carpetbaggers and Disruption’s Human Costs

Tesla’s ‘autopilot’ is awesome, but Tesla and other ‘gig economy’ employers are playing risky games with people’s lives and livelihoods.

Update (July 6, 8:00 PM): Someone pointed out Tesla’s rebuttal to a Fortune article; together they present different information about the timeline of Joshua Brown’s death. The only date I refer to below is June 30, the date of Tesla’s press release. The accident itself appears to have occurred on May 7.

I will write more tomorrow about the new information both articles present, as well as a response to some questions about my position on innovation, ‘autopilot’ and technology.

On June 19, the actor Anton Yelchin was killed when his Jeep Grand Cherokee rolled backward and pinned him. That vehicle, it turns out, had been recalled back in April because owners had difficulty using its “monostable” gear shifter. Here’s a photo of the shifter on Flickr, along with some more documentation of the recall.

The issue central to the recall was that Chrysler Fiat had received several complaints of drivers exiting the car without placing it in park. To understand just how bad the shifter design is, and how easy it is to make such a mistake, take a look at this YouTube video from 2012 demonstrating how to use the new shifter as introduced in the 2013 Dodge Challenger.

Pay close attention around 0:26, where the demo driver pushes, then holds, the shifter until it moves from D to R and finally to P. By the time Mr. Yelchin’s Jeep model was released, some refinement of that delay appears to have been introduced, as shown in the operating video for its shifter.

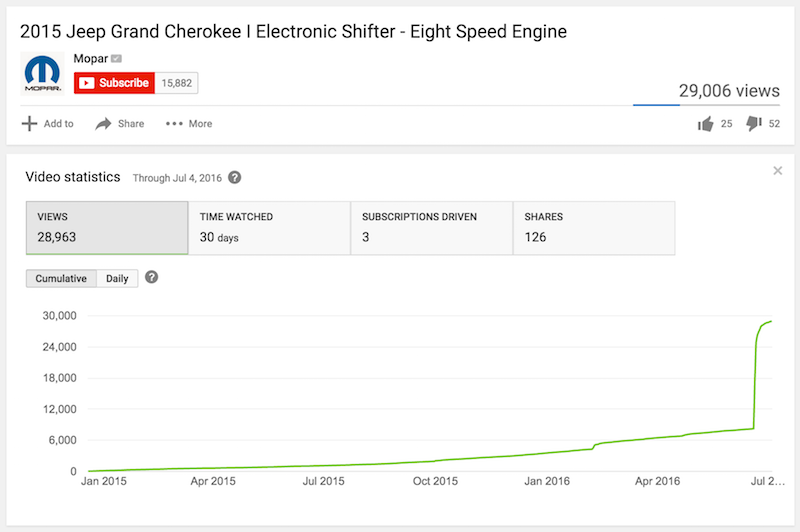

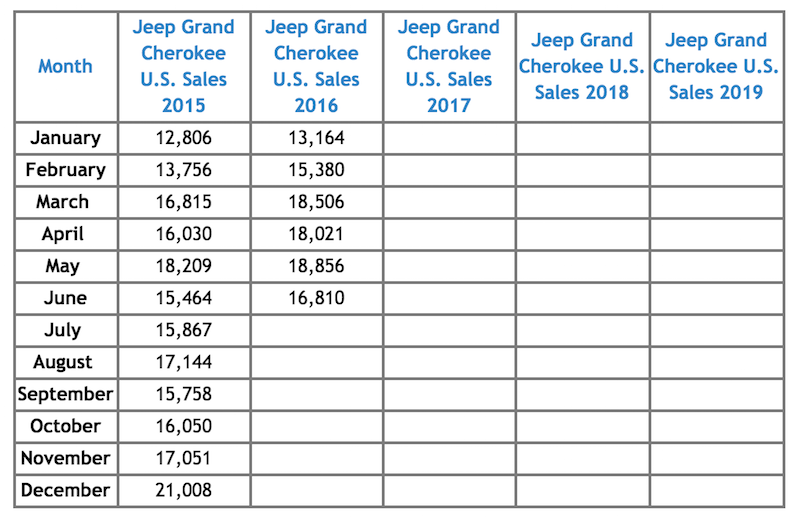

Given that the recall involves 1.1M vehicles worldwide (800K in the U.S.), it is unlikely that every owner watched these videos, which are meant to augment and, in some cases, replace a printed owner’s manual. For example, prior to Mr. Yelchin’s accident, the shifter video for that vehicle had been viewed roughly 8,000 times (about 20K views since).

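Given sales of 195,958 Grand Cherokees in 2015 alone, it seems unlikely that all owners, much less all drivers, had viewed the relevant video: even if each of those 8,000 views came from a different 2015 buyer, that would account for only about 4% of them.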

At any rate, the video demonstrates how easily the vehicle may accidentally be placed in reverse, or how a driver may inadvertently fail to complete a shifting operation. It is just as easy to see how an analog shifter (one with immediate physical feedback) would likely help avoid such a mistake.

Furthermore, this shifter appears to be the worst kind of design: design for design’s sake, the kind that tarnishes the public’s view of design.

- Does this shifter design enable something a more traditional shifter does not?

- Is this design required by the mechanics of the transmission?

- Is there a measurable improvement to the vehicle because of this design?

- Is the cost of retraining drivers’ behavior worth the tradeoff relative to a traditional shifter?

When ‘flappy-paddle’ shifting was introduced on high-end vehicles such as the Ferrari F355 (1997), paddle shifters had already been in use in Formula One since the late 1980s. Loads of kinks had been worked out before the design and gearbox were introduced to consumers. That does not appear to have been the case with the Chrysler Fiat design.

Indeed, even for Ferrari, early designs were not considered adequate. In the F355, a driver had to operate both paddles simultaneously, then select park, reverse or neutral from a menu. Because this was perhaps still prone to error if the wrong option was selected, later models such as the Ferrari California made reverse an explicit choice with a large ‘R’ button on the center console.

In a statement dated June 30, Tesla Motors acknowledges the death of Joshua Brown, a Tesla owner and enthusiast who died while his car’s ‘autopilot’ feature was engaged. It is the first fatality in a Tesla with ‘autopilot’ activated, and Tesla is quick to trot out lots of numbers and statistics in an attempt to diminish the public relations impact of the accident.

Since ‘autopilot’ officially launched, Tesla has distanced itself from culpability by stating, “The driver is still responsible for, and ultimately in control of, the car.” The Guardian and others have underscored this position in their reporting:

Tesla is very clear about the fact that the driver is responsible for the car at all times and should be actively in control, despite the AutoPilot system: it will be the driver’s fault, not Tesla’s if the car ends up in a road traffic collision.

But I challenge you to interpret Tesla’s thirteen words (of 409) as a clear, legally binding commitment in the context of the ‘autopilot’ press release:

Tesla Autopilot relieves drivers of the most tedious and potentially dangerous aspects of road travel. We’re building Autopilot to give you more confidence behind the wheel, increase your safety on the road, and make highway driving more enjoyable. While truly driverless cars are still a few years away, Tesla Autopilot functions like the systems that airplane pilots use when conditions are clear. The driver is still responsible for, and ultimately in control of, the car. What’s more, you always have intuitive access to the information your car is using to inform its actions.

Especially when surrounded by statements about how the functionality will take care of tedium and danger, this paragraph feels more like an invitation to turn on ‘autopilot,’ kick back, and surf the web all the way from your house to your office than an advisement to exercise caution and restraint. [1]

However, as far back as October 2014, legal experts and policymakers had begun debating Tesla’s legal responsibility, or that of any autonomous or enhanced vehicle manufacturer. Seemingly in contrast to Tesla’s distancing itself from legal culpability, David Snyder put it this way in this 2011 Wired article (where “bring in” refers to incorporating the manufacturer in legal proceedings):

The driver is presumed to be in control of his or her vehicle, but if the driver feels that there have been some facts supporting the notion that the equipment caused in whole or in part the accident, that driver would probably bring in the manufacturer of the equipment.

That Wired piece discusses both autonomous driving and innovations that assist drivers, but in both cases it is fair to say that the legal questions of who is at fault, who is responsible for damages, and much more are far from settled.

When one of Google’s autonomous test vehicles caused a minor accident back in February, much of the reporting focused on the confluence of human errors in judgment that led to the accident. Google itself seemed to come down more on the side of human error, while timidly acknowledging “some” responsibility:

In this case, we clearly bear some responsibility, because if our car hadn’t moved there wouldn’t have been a collision. That said, our test driver believed the bus was going to slow or stop to allow us to merge into the traffic, and that there would be sufficient space to do that.

Subsequent reporting shows how murky the waters are when it comes to the determination of responsibility:

The current law means that if a self-driving car crashes then responsibility lies with the person that was negligent, whether that’s the driver for not taking due care or the manufacturer for producing a faulty product.

Is the driver responsible for not intervening? Is Google responsible because its software failed to account for this scenario? Is it even possible to account for all scenarios, so long as human drivers still drive alongside autonomous vehicles? What about when a child dives in front of an autonomous vehicle that cannot stop in time? What about when an autonomous vehicle is hacked? Is a death caused by an autonomous vehicle “manslaughter” in the traditional sense?

Clearly, there is ground to cover.

Prior to Mr. Brown’s fatal crash, drivers of Tesla vehicles had experienced and reported some dicey moments while on ‘autopilot’ that point more to the software and hardware than to human negligence. But, to varying degrees, drivers are also making the case, through their own actions, for full computer control over human intervention.

As early as October of last year, a team of drivers relied on Tesla’s ‘autopilot’ to cross the U.S. in record time, earning a post-hoc endorsement from Elon himself.

Congrats on driving a Tesla from LA to NY in just over two days! https://t.co/hjAnAAIdMB

— Elon Musk (@elonmusk) October 21, 2015

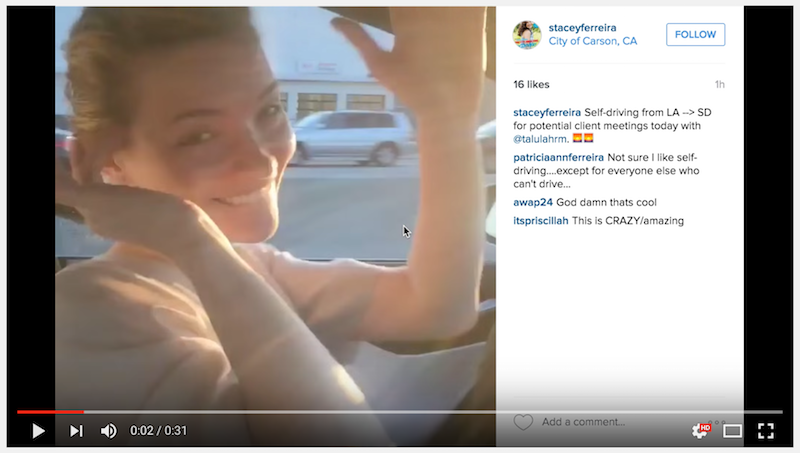

However, drivers have also continued to push ‘autopilot’ beyond what it can or should do, posting videos of what is often very … reckless … behavior online. [2]

In the days since the crash, it has been reported that Mr. Brown was watching a Harry Potter DVD at the time, though it would be irresponsible not to mention that much about the crash is still under investigation.

To be fair, as stated in Tesla’s press release regarding Mr. Brown’s accident, the system will demand ‘hands-on’ contact with the wheel every so often, which is difficult to discern in the videos above.

The system also makes frequent checks to ensure that the driver’s hands remain on the wheel and provides visual and audible alerts if hands-on is not detected. It then gradually slows down the car until hands-on is detected again.

I am of two minds here.

It is abundantly clear that the behavior of some drivers is irresponsible, reckless and endangers the lives of others on the road, as much as their own. Though the actions of a few cavalier Tesla owners should not condemn them all, it is difficult to see how such behavior will not lead to further injuries or fatalities.

On the other hand, given the tone of Tesla’s initial press release for the launch of ‘autopilot’, and the company’s half-hearted tsk-tsk-ing of those using ‘autopilot’ recklessly, it is easy to see why Mr. Brown and others continue to experiment with their cars.

Do we blame the driver who receives a ticket (in the third video above) when he does not manually intervene as his Tesla cruises along at 75 mph in a 60 mph zone? Has Tesla truly ensured driver safety in this case? Does Tesla care more about optimizing travel time than about human safety (both in the vehicle and around it) when it seems ‘okay’ to ignore certain laws?

Here’s my issue.

Allowing big corporations to maintain a laissez-faire attitude with regards to their culpability for human lives is dangerous.

Google’s AV operators are presumably well informed about the risks and responsibilities that come with their job before they ever set foot in a car. Thus the millions of miles and tens of thousands of hours that Google vehicles have spent on the road, gathering data, are a formal part of someone’s job.

In contrast, Tesla’s 130 million miles of ‘autopilot’ data is gathered from the driver-owners of the vehicles. Presumably owners are informed of this at purchase. While all of that data is analyzed and fed back into the decision-making matrix used by ‘autopilot,’ it serves Tesla more than it does any individual owner.

Put more clearly: Tesla owners are de facto guinea pigs for ‘autopilot,’ whereas Google’s test drivers are employees who are compensated for their time and whose job it is to test and gather data.

Chrysler Fiat’s rollout of a shifter design that clearly caused well-documented confusion is a similar example of disregard for consumer well-being.

Certainly their factory engineers and testers logged hundreds or thousands of hours on test vehicles, but those folks are trained users, operating the equipment day in and day out. Offloading user manuals to YouTube videos seems like another slippery way to meet some abstract legal requirement for documentation: a place to point to when something goes wrong, with a “Well, we did post a video on xyz.”

It doesn’t matter if a company is based in San Francisco or Detroit: innovation for innovation’s sake, or ‘disruption,’ without accountability is something we need to take a long, hard look at.

Autonomous vehicles are just one small part of this big picture. There are other readily observed scenarios in which people’s lives, or livelihoods, are taken advantage of by companies keen on ‘disrupting’ some aspect of society:

- Uber, Lyft and other ridesharing companies force costs onto drivers while treating them as contractors to avoid paying employee benefits such as healthcare. Those same rideshare companies also face scrutiny over not compensating riders in legal or accident claims.

- Airbnb and other home-rental services face mounting legal pressure over zoning laws, licensing and other legal challenges similar to those facing ridesharing companies. Cities like Seattle are considering limiting rentals within the city, which can adversely affect those who have built businesses around renting homes, even when they comply with current law, pay taxes, etc. Further, Airbnb has manipulated statistics in order to paint a more favorable picture of the company, particularly after large PR headaches such as this one, where an Airbnb ‘super host’ was inexplicably and unceremoniously removed from the platform.

- Theranos, a biotech startup once valued at $9B, is now under Congressional scrutiny for false claims that it could detect hundreds of diseases from a single drop of blood. In its letter, the Congressional Committee writes: “Given Theranos’ disregard for patient safety and its failure to immediately address concerns by federal regulators, we write to request more information about how company policies permitted systemic violations of federal law.”

- Solyndra, a solar company that lied to secure $500M in federal money, may not have directly harmed any given individual, but that money comes straight from U.S. taxpayers as part of a larger incentive for green energy.

Fast Company summed up these legal woes and more, in a piece aptly titled, The Gig Economy Won’t Last Because It’s Being Sued To Death.

The common theme in all of this is that very large, venture-backed (save one of the above) startups are prioritizing profit above all else. Often it is the users—members, drivers, renters, owners—that bear the brunt of hardship, loss, and legal fallout, when companies go head-to-head with governments and existing market forces.

In one sense, we cannot place blame on them, since venture capital demands a high rate of return in exchange for cash injections. But in many practical ways, as well as conceptual ones, these companies are doing very real damage to the very people they rely on for their data, their products and indeed their very success.

In a traditional employer/employee relationship there are well-established protections for both parties. As progress continues, it is clear that keeping legislation apace with innovation is impractical. But to avoid a world run by a new kind of carpetbagger, we will have to examine what it means to be an employee, to be a consumer, to be a citizen, and to have rights. And we’ll have to do it fast.

[1] One other thing worth noting: the press release’s comparison to the autopilot feature on large aircraft is somewhat unfounded. Pilots of major commercial aircraft (those on which autopilot systems are found) must log 1,500 flight hours before they are eligible for hiring by U.S. airlines, and at least 1,000 hours of airline flying experience before being allowed to captain a flight. Obviously no similar training is required for operating an autonomous or partially autonomous automobile.

[2] All of those videos come via this Guardian article.
